20th September 2024


Docker is your cosmic launchpad, propelling your applications to new heights in the vast expanse of the cloud. Imagine no more wrestling with complex infrastructure and no more sleepless nights worrying about compatibility. This is not a dream but a tangible possibility with Docker.

Docker has significantly changed the way applications are built, packaged, shipped and run. By giving each application an isolated environment to run in, it has simplified the development process and made it possible for organizations to reach high levels of scalability and efficiency.

Docker is a container-based platform for developing, deploying and running applications. A container is a lightweight, standalone executable package that includes everything an application needs to run: code, runtime, system tools and libraries. Docker helps avoid the situation where an application runs well in one environment but not in another, commonly known as the ‘it works on my machine’ problem.

Portability: Containers are lightweight and portable. They are created from Docker images and can therefore run on any operating system that supports Docker. This removes much of the per-environment configuration that tends to cause compatibility problems.

Scalability & Availability: Applications can be scaled horizontally, meaning that containers can be added or removed as demand changes. This helps applications absorb increased traffic and load without becoming congested, as shown in the diagram below.

Efficiency: Docker containers are lightweight because they share the host operating system’s kernel, which reduces overhead and improves speed.

Isolation: Each container runs as a separate unit, isolated from other containers and from the host system, which improves security and keeps each application in its own space.

Consistency: Docker ensures that an application behaves the same way across environments, rather than working on one machine but not on others.

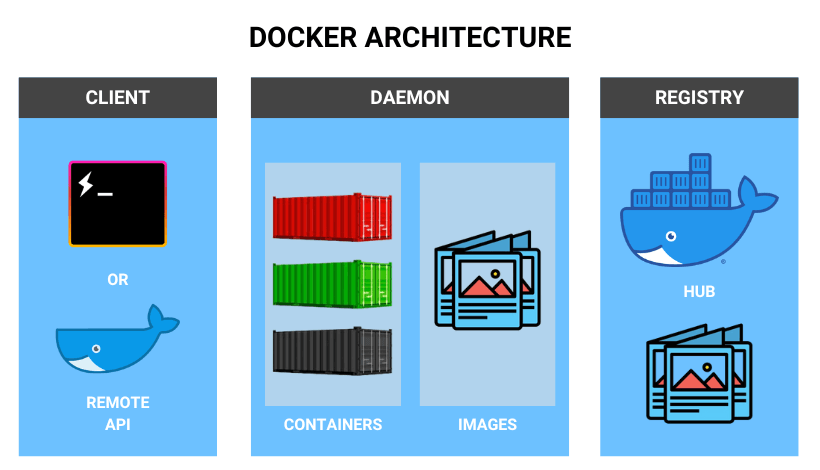
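To make the horizontal scaling idea concrete, here is a minimal sketch of how a replica count might be chosen from the current load. The function name `desiredReplicas`, its parameters and the numbers used are assumptions made for illustration; none of them are part of Docker itself.

```javascript
// Hypothetical helper: how many identical containers are needed for a given load.
// All names and numbers here are illustrative assumptions, not Docker APIs.
function desiredReplicas(requestsPerSecond, capacityPerContainer, minReplicas = 1, maxReplicas = 10) {
  if (capacityPerContainer <= 0) {
    throw new RangeError('capacityPerContainer must be positive');
  }
  // Round up so a partial "slice" of traffic still gets its own container.
  const needed = Math.ceil(requestsPerSecond / capacityPerContainer);
  // Clamp to the configured bounds so we never scale to zero or without limit.
  return Math.min(maxReplicas, Math.max(minReplicas, needed));
}

console.log(desiredReplicas(450, 100));  // 5
console.log(desiredReplicas(0, 100));    // 1 (never below the minimum)
console.log(desiredReplicas(5000, 100)); // 10 (capped at the maximum)
```

The result is the kind of number you would hand to a scaling command such as `docker compose up --scale`.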

Docker uses a client-server architecture. The Docker client is the process that runs on the client side; from it, the user issues commands to the Docker daemon to create, run and manage containers. The daemon works in tandem with the host operating system to allocate resources and create containers.

Images: A read-only template that holds the instructions for how a container should be created.

Containers: A running instance of a Docker image. A worked example appears later in this article.

Docker Compose: A tool for defining and running Docker applications that consist of multiple containers.

Docker Swarm: Docker's clustering and orchestration tool, which manages the containers of an application across multiple hosts at once.
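The client and daemon split can be sketched in a few lines of Node.js. The example below assumes the daemon is listening on the default Linux socket path `/var/run/docker.sock` and that the current user is allowed to read it; the helper names `buildRequestOptions` and `askDaemon` are made up for this illustration. It talks to the Docker Engine HTTP API directly, which is roughly what the `docker` command-line client does on your behalf.

```javascript
const http = require('http');

// Assumption: the daemon listens on the default Linux socket path.
const DOCKER_SOCKET = '/var/run/docker.sock';

// Build the options for an HTTP request that travels over the Unix socket
// instead of a TCP port.
function buildRequestOptions(path, method = 'GET') {
  return { socketPath: DOCKER_SOCKET, path, method };
}

// Send one request to the daemon and resolve with the parsed JSON reply.
function askDaemon(path) {
  return new Promise((resolve, reject) => {
    const req = http.request(buildRequestOptions(path), (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        try {
          resolve(JSON.parse(body));
        } catch (err) {
          reject(err);
        }
      });
    });
    // Typical failures: Docker is not running, or the socket is not readable.
    req.on('error', reject);
    req.end();
  });
}
```

With Docker running, `askDaemon('/containers/json')` should resolve with an array describing the running containers, which is roughly the information `docker ps` prints.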

Today Docker is widely used in application development by businesses of all sizes. Here are a few examples of how Docker is being used:

Microservices Architecture: Docker is particularly suitable for microservices-based applications, since the architecture consists of small services that can be scaled and upgraded independently.

Continuous Integration and Continuous Delivery (CI/CD): Docker can be used to automate the build, testing and deployment of applications, which speeds up the delivery of new features and bug fixes.

Cloud-Native Applications: Docker plays a critical role in cloud-native applications, since containers make it practical to build and deploy applications that are portable and scalable by design.

Data Science and Machine Learning: Docker can be used to build reproducible data science environments that are easy to copy and share.
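As a rough illustration of the CI/CD use case, the sketch below runs pipeline stages in order and stops at the first failure, so a broken test run never reaches deployment. The `runPipeline` function and the `{ name, run }` stage shape are hypothetical; in a real pipeline each `run` would invoke something like `docker build` or a test runner rather than the stand-in functions used here.

```javascript
// Run pipeline stages one after another and stop at the first failure.
// Each stage is a hypothetical { name, run } pair; `run` returns a promise.
async function runPipeline(stages) {
  const completed = [];
  for (const stage of stages) {
    try {
      await stage.run();
      completed.push(stage.name);
    } catch (err) {
      // Fail fast: later stages (such as deploy) must not run after a failure.
      return { ok: false, completed, failedStage: stage.name, error: err.message };
    }
  }
  return { ok: true, completed, failedStage: null, error: null };
}

// Example with stand-in stages: the test stage fails, so deploy never runs.
const stages = [
  { name: 'build', run: async () => {} },
  { name: 'test', run: async () => { throw new Error('1 test failed'); } },
  { name: 'deploy', run: async () => {} },
];

runPipeline(stages).then((result) => console.log(result));
```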

For a beginner, the recommended approach is to download and install Docker and then practice creating and launching a container. There are many places to learn Docker, from tutorials and the official documentation to Docker user groups and forums.

    mkdir express-app && cd express-app
    npm init -y
    npm install express

index.js:

    const express = require('express');

    const app = express();
    const port = 3000;

    // Respond to requests on the root path
    app.get('/', (req, res) => {
      res.send('Hello, Docker!');
    });

    // Start the HTTP server
    app.listen(port, () => {
      console.log(`App running at http://localhost:${port}`);
    });

Dockerfile:

    # Base image
    FROM node:18-alpine

    # Set working directory
    WORKDIR /app

    # Copy package.json and install dependencies
    COPY package*.json ./
    RUN npm install

    # Copy the rest of the code
    COPY . .

    # Expose port
    EXPOSE 3000

    # Command to run the app
    CMD ["node", "index.js"]

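Build the image:

    docker build -t express-app .

Run a container from it, mapping port 3000 on the host to port 3000 in the container:

    docker run -p 3000:3000 express-app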

Open http://localhost:3000 in your browser. Now your Express.js app is successfully Dockerized and running in a container! 🚀
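If you prefer to check the result from a script rather than a browser, a small Node.js smoke test along these lines should do. The `checkApp` helper is a name invented for this sketch, and it assumes the container from the steps above is running with port 3000 published on the host.

```javascript
const http = require('http');

// Fetch a URL and resolve with its status code and body text.
function checkApp(url) {
  return new Promise((resolve, reject) => {
    http.get(url, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve({ status: res.statusCode, body }));
    }).on('error', reject); // e.g. the container is not running yet
  });
}

// Assumes the container was started with `docker run -p 3000:3000 express-app`.
checkApp('http://localhost:3000')
  .then(({ status, body }) => console.log(status, body))
  .catch((err) => console.error('App not reachable:', err.message));
```

With the container up, this should print the status code 200 followed by the `Hello, Docker!` greeting from `index.js`; if the container is stopped, it reports that the app is not reachable instead.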

Docker is constantly evolving. New features and capabilities are introduced regularly, which makes the platform more flexible and more powerful. Key trends to anticipate include:

Integration with Kubernetes: Docker is commonly used together with Kubernetes, a widely adopted container orchestration platform.

Serverless Computing: Docker can be used to build applications on serverless technologies, which means developers do not need to deal with infrastructure complexities.

Edge Computing: Docker is becoming important for edge computing, in which applications run close to the data source for faster execution.

Creating, deploying and running applications has become much easier with Docker. By providing a stable, secure and isolated environment for applications, it has reduced the complexity of shipping software and given organizations levels of scalability and efficiency that were previously hard to reach. Docker is an essential tool if you plan to bring modern ways of working into your application development process.

For further learning and resources, check out these helpful links:

Official Website: https://www.docker.com/

Documentation: https://docs.docker.com/

Community Forums: https://forums.docker.com/

Learn how CI/CD pipelines can streamline your Docker workflows: https://www.abusayaf.tech/blog/ci-cd-pipeline

Learn how to use Kubernetes to deploy and orchestrate your Dockerized applications: https://www.abusayaf.tech/blog/kubernetes-handle-massive-network-traffic

Abu Sayaf

Top-rated freelancer on Upwork. Specialized in NextJS, React, Node.js, Prisma, Docker, AWS, NestJS, and Shopify. Transforming ideas into powerful, scalable solutions with a passion for clean code and innovation.